Saturday, February 9, 2008

Success with Random Variables

In the last entry, I noted that at least part of the challenge is that there are so many different types of functions that appear. But I think that a significant issue comes back to confusion about what random variables represent and how they relate to questions that are posed. Recall that a random variable summarizes some aspect of a random experiment as a single number. (We will soon generalize to the ability to summarize with multiple random variables.) Typical questions in probability focus on the probability of events and the average of certain quantities. Almost always, we answer such questions by identifying an appropriate random variable. We then decide how to characterize events in terms of that random variable, or how to express the quantity being averaged in terms of that random variable.

The examples of random variables related to a sequence of Bernoulli trials provide our first real example of this type of reasoning, and I think this transition is part of why the topic was difficult for many of you. First, we must remember that the random variable is not the same as the random experiment, but simply one of many different ways to summarize an aspect of that experiment. The experiment itself is characterized completely by the sequence of Bernoulli trials, each of which is going to be either a success or a failure. Random variables will be measurement that are related to these successes or failures, and we must choose an appropriate measurement that will allow us to answer the questions of interest.

On our quiz last week, I gave the following scenario: "Apples are packaged in 3-pound bags. Suppose that 4% of the time, the bag weighs less than 3 pounds. You select bags randomly and weigh them in order." In principle, there is an unlimited supply of bags of apples, and we indefinitely select a random bag and weigh it. If we were to weigh enough bags over a long enough time, we would find that the fraction that are underweight appears 4% of the time. However, for any particular number of weighings, we could have found any number of underweight bags. The random experiment is described by an infinite sequence of F's and U's, with an F if the bag was full and a U if the bag was underweight.

So now consider the first question: "What is the probability that you must weigh at least 20 bags before you find an underweight bag?" One method that we could use to do this is to directly understand the event in question. That is, we could create a tree diagram that would eventually give enough information to answer the question. Unfortunately, this tree diagram would be much to cumbersome to answer a question about the first 20 bags weighed: 2^20 different outcomes after 20 weighings. So we try to see if there is a random variable for this random experiment that has enough information to answer the question instead.

There are actually multiple ways to choose a random variable to answer the question. The best random variable is a geometric random variable, which counts the number of Bernoulli trials until we see the first success. Since our question considers when we find the first underweight bag, we define success for our purposes as weighing a bag as underweight. Thus, X counts the number of weighings until we find the first underweight bag. If the first bag is underweight, X=1. But if the first 5 bags are full and the 6th bag is underweight, X=6. Every path on our hypothetical tree diagram is associated with a particular value of the random variable. Since an underweight bag will be chosen with probability p=0.04, we use X~Geometric(p=0.04). Now, having chosen a random variable, we must determine how we answer the question in terms of that random variable. The event of interest, "weigh at least 20 bags before you find an underweight bag," corresponds to an event, "X is greater than or equal to 20". Thus, we wish to compute P[X≥20].

Another way that we could answer the question is to consider that finding an underweight bag for the first time on or after the 20th weighing means that the first 19 bags must have all been full. Consequently, we could answer our question using a Binomial random variable with n=19. So let X count the number of underweight bags out of the first 19 that are weighed. Again, a "success" is finding an underweight bag, so that X~Binomial(n=19,p=0.04). Our event can now be restated as saying that "X is equal to 0". But remember that the X in this paragraph is different from the random variable in the previous paragraph. For this random variable, our question will be answered by computing P[X=0].

The third question on the quiz asked, "What is the probability that you find the 5th underweight bag before you weigh 80 bags?" The best choice for a random variable that will answer our question is to count the number trials until you do find the 5th underweight bag. If we say that a trial is a success if the bag is underweight, then we are counting trials until the 5th success. That is, our random variable X is a negative binomial random variable with r=5 (the number of successes needed to stop counting) and p=0.04 (the success probability). We write this: X~Neg.Binom.(r=5,p=0.04). To answer the question, we must realize that the event of interest is to say that X<80. (We stop counting before we reach 80.) Thus, the answer would be P[X<80].

And of course, as I mentioned earlier, we could actually compute the probability using another type of random variable. For this problem, we must find another way to describe the event of interest. One way to do this is to realize that we find the fifth bag prior to the 80th weighing if there are at least 5 underweight bags by the time we weigh the 79th bag. This can be represented using a binomial random variable. Let our random variable X count the number of underweight bags in the first 79 weighed. Thus, X~Binomial(n=79, p=0.04). The probability we wish to compute is P[X>5].

In summary, to do well in probability, you will need to think probabilistically. Before you can actually compute a quantity (which usually involves fairly straightforward mathematics), you must identify a random variable and understand how to answer the question in terms of that random variable. Then you can use one of the appropriate functions to finally answer the question, whether it is a probability or an expectation.

Monday, February 4, 2008

Random Variables

There seems to be a lot of confusion in relation to random variables. Part of this has to do with students being pressed onto new subjects without necessarily understanding the previous material adequately. Part of this has to do with the plethora of new functions that are introduced in relation to these random variables (e.g., a random variable X is itself a function in the probability space, it has a p.m.f., a m.g.f., and a c.d.f.). And part of this confusion has to do with a variety of ways to compute things: probabilities, expectations, and moments. Before you allow yourself to be engulfed by the onslaught of waves of probability theory crashing down on you, take a breath (of air) and consider the following.

Probabilities most fundamentally describe random experiments. The probability space (or sample space) describes the possible outcomes of the experiments in as much detail as necessary to completely characterize that experiment. A random variable is a single numeric summary of the nature of the experimental outcome. There are typically many different outcomes of the experiment that can lead to the same value for the random variable. Furthermore, a single experiment can provide the information for many different random variables, each of which summarizes a different aspect of the experiment.

For example, suppose our experiment consists of rolling two standard 6-sided dice, one of which is red and the other is green. There are 36 distinct outcomes. We might be interested in the value of the red die, which value we could assign to a random variable R. We might instead be interested in the value of the green die, which value we could assign to a random variable G. Other values of interest might be the largest of the two values, the smallest of the two values, the sum of the values, the difference between the dice, the greatest common factor between the values, etc. The list could go on forever, with each summary value corresponding to a different random variable.

The random variables R and G are independent because the roll of one does not influence the other. They are also identically distributed. The probability mass function (p.m.f.) for the random variable describes the probabilities of individual values for R. We either need a formula for an arbitrary value or a table showing all of the values to describe this function. For this random variable, we have R(x)=1/6 for each of the values in the support S={1,2,3,4,5,6}. The expected value is defined as a sum over all possible values in the support For a single die roll (R or G), we have E[R] = 1/6(1)+1/6(2)+...+1/6(6) = 3.5.

For a single die roll (R or G), we have E[R] = 1/6(1)+1/6(2)+...+1/6(6) = 3.5.

Let us now consider another random variable, S, which represents the sum of the two values. That is, S=R+G. The support for S is the set {2,3,...,12}. We could compute the p.m.f. for this random variable: f(7)=6/36, f(6)=f(8)=5/36, f(5)=f(9)=4/36, f(4)=f(10)=3/36, f(3)=f(11)=2/36, and f(2)=f(12)=1/36. And we could compute the expected value of the random variable using the definition of mathematical expectation:

However, since S=R+G, we have a lovely little theorem that allows us to use a sum rule:

This is much easier than calculating using the definition.

So, we learn a lesson: if we can express a random variable as a sum of easier random variables, expected value may be more effectively calculated using these individual terms.

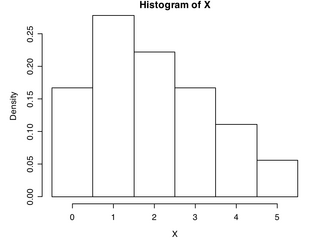

To continue, let us consider the distance between the two rolls. That is, we introduce a random variable X = |R-G| to represent the distance between the rolls. The smallest possible value for X is 0, which occurs when the dice are the same. The largest possible value for X is 5, which occurs when one die is 1 and the other is 6. So the support for X is the set S={0,1,2,3,4,5}. In this example, a table for the p.m.f. is much easier, computed based on the number of ways to obtain each distance:

f(0)=6/36, f(1)=10/36, f(2)=8/36, f(3)=6/36, f(4)=4/36, f(5)=2/36

For this problem, the random variable X is not easily expressed as a sum. So we must compute expected value using the original definition:

In summary, a random variable is a single number that summarizes some aspect of a random experiment. The p.m.f. of the random variable gives probabilities of individual outcomes. The expected value (or mathematical expectation) computes the average of a random quantity weighted by appropriate probabilities of those values.